Prompt Engineering

What is Prompt Engineering?

“Prompt engineering” refers to the crafting of specific requests or instructions to interact with AI models, such as ChatGPT. The term “prompt” describes the text or command given to an AI model to perform a particular task. This prompt should be designed in such a way that the user receives the results and answers they are aiming for.

Goal of Prompt Engineering: Improving the Performance of AI Models

The main goal of prompt engineering is to improve the interaction between humans and AI. This is achieved by optimizing the way instructions or requests are formulated.

What Are the Goals of Prompt Engineering?

- Precise Results: By skillfully phrasing prompts, it should be ensured that AI models clearly understand what is being asked and provide precise and relevant responses.

- Bias Reduction: The term “bias” refers to unwanted prejudices or distortions in the responses an AI model may give to a particular prompt. These can arise, for example, from linguistic, cultural, or gender-related nuances in the prompts. By consciously phrasing requests, it is possible to try to minimize these unwanted biases in the responses.

- Efficiency and Effectiveness: Prompt engineering makes it possible to increase the efficiency and effectiveness of AI models by aligning them more closely with the needs and expectations of users.

Prompt engineering enhances the performance, adaptability, and ethical responsibility of AI models, enabling AI technologies to be integrated more effectively and responsibly across various fields.

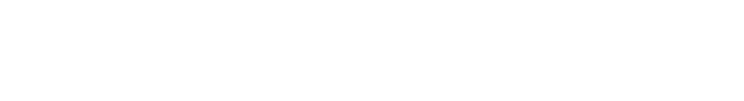

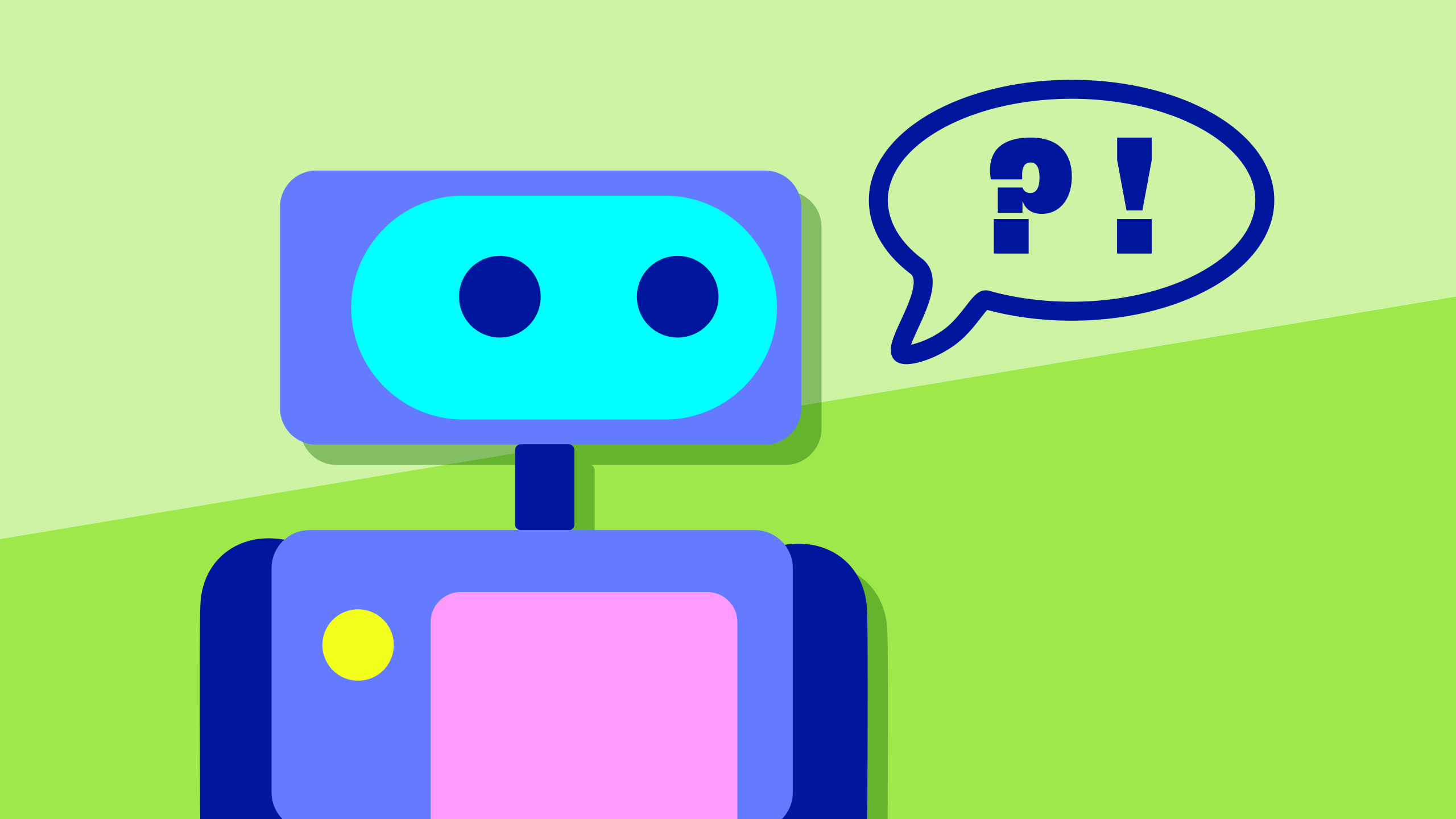

Prompt Engineering Tips: The Key Basics for Writing Effective Prompts

Prompt engineering can be a highly complex topic. Successful prompt engineering requires a deep understanding of how the model works, as well as its strengths and weaknesses. Nevertheless, there are some basic rules that should be followed when creating prompts:

- Precise Wording: Make sure your prompts are clear and precise. This helps avoid misunderstandings and ensures you get exactly the results you want.

- Contextualization: Add sufficient context to your requests so that the AI model can better understand the task. Clear contextual information helps to achieve more accurate answers.

- Iteration and Experimentation: Test different phrasings and experiment with various prompts.

- Avoiding Biases: Be mindful to minimize linguistic and cultural biases in your prompts. Phrase instructions as neutrally as possible to reduce prejudice in the responses.

- Task-Specific Prompts: Adapt your prompts to the specific use case. A prompt that works well for text generation may be less suitable for a translation.

- Documentation: Keep a record of the performance of different prompts. This not only improves traceability but also enables better analysis and optimization.

- Community Exchange: Share and discuss with others. Platforms like GitHub and forums can often help improve your prompt strategies.

Prompt Engineering Terms: Prompt Injection, Prompt Takeover & More

You may have already come across terms like prompt injection, prompt takeover, or prompt leak. Here’s what they mean:

- Prompt Injection: In prompt injection, new instructions or responses are inserted into a chatbot’s prompt set. Developers can use prompt injection to extend functionality and tailor the model to specific requirements. The goal is to improve the chatbot’s overall performance or integrate new features.

- Prompt Takeover: When an AI model takes over the conversation and processes requests without human intervention, this is referred to as a prompt takeover. It is often used in situations where predefined processes or frequently asked questions can be handled automatically. A prompt takeover can help reduce wait times and create a seamless user experience.

- Prompt Leak: A prompt leak describes a situation in which an AI bot reveals information that should not be shared with the user. Possible causes include flaws in the system’s design or implementation. Such leaks can compromise the security of user data.

- Reverse Prompt Engineering: Reverse prompt engineering aims to understand how AI models work. It involves analyzing how certain prompts lead the model to produce specific outputs. By experimenting and analyzing, one tries to determine which types of prompts generate the desired or undesired results. The goal is to decipher the model’s logic to better predict how it will respond to different phrasings.

Automated Prompts: The Future of Prompt Engineering?

What does the future of AI models and prompt engineering look like? How relevant will prompt engineering be? Artificial intelligence already plays a significant role in our world today and is expected to become even more important in the near future. Prompt engineering will also continue to evolve. One possible scenario is the automation of prompts: future developments could aim to automate the process of prompt engineering, for example, through advanced algorithms that automatically generate optimized prompts. Machine learning can be used to train models that continuously learn from the performance of various prompts. Based on feedback and results, these models could automatically improve their prompt-generation capabilities – increasing efficiency and making the use of AI models in various applications easier.

And How Does JUSTBLUE Prompt?

At JUSTBLUE, artificial intelligence has long been part of our daily work – not as a replacement for creativity, but as a tool that opens up new possibilities. We see particular potential in prompting: it’s our direct line to the AI and plays a decisive role in shaping the final outcome. Whether for idea generation, text development, or visual concepts, we use prompts deliberately to get to the point faster, structure complex thoughts, or spark new creative directions. It’s not about chance, but about finesse: how you ask determines the result.

Our internal AI core team regularly tests new tools and platforms, shares knowledge within the team, and works with all colleagues to develop a feel for how to use AI in a way that enhances, rather than replaces, our work. Prompting in this context isn’t just a buzzword – it’s real, hands-on practice.

FAQ

Prompt engineering refers to the process of designing or developing effective prompts for AI models such as GPT (Generative Pre-trained Transformer). It involves formulating clear and precise instructions to obtain the desired results from an AI model.

Prompt engineering is crucial for improving the performance of AI models. With precisely formulated instructions, users can control exactly what the model generates. A well-crafted prompt helps minimize unwanted or misleading results.

The consistency of model outputs can be maintained by carefully designing prompts and regularly reviewing the results. It’s also important to gather feedback and adjust the model if necessary.